Palantir AIP

Palantir AIP connects generative AI to operations. Together with Foundry (Palantir's data operations platform) and Apollo (Palantir's mission control for autonomous software deployment), AIP is part of an AI Mesh that can deliver the full gamut of AI-driven products: LLM-powered web applications, mobile applications that use vision-language models, and edge applications that embed localized AI. We call this entire set of capabilities, functionality, and tooling the Palantir platform.

Architecture

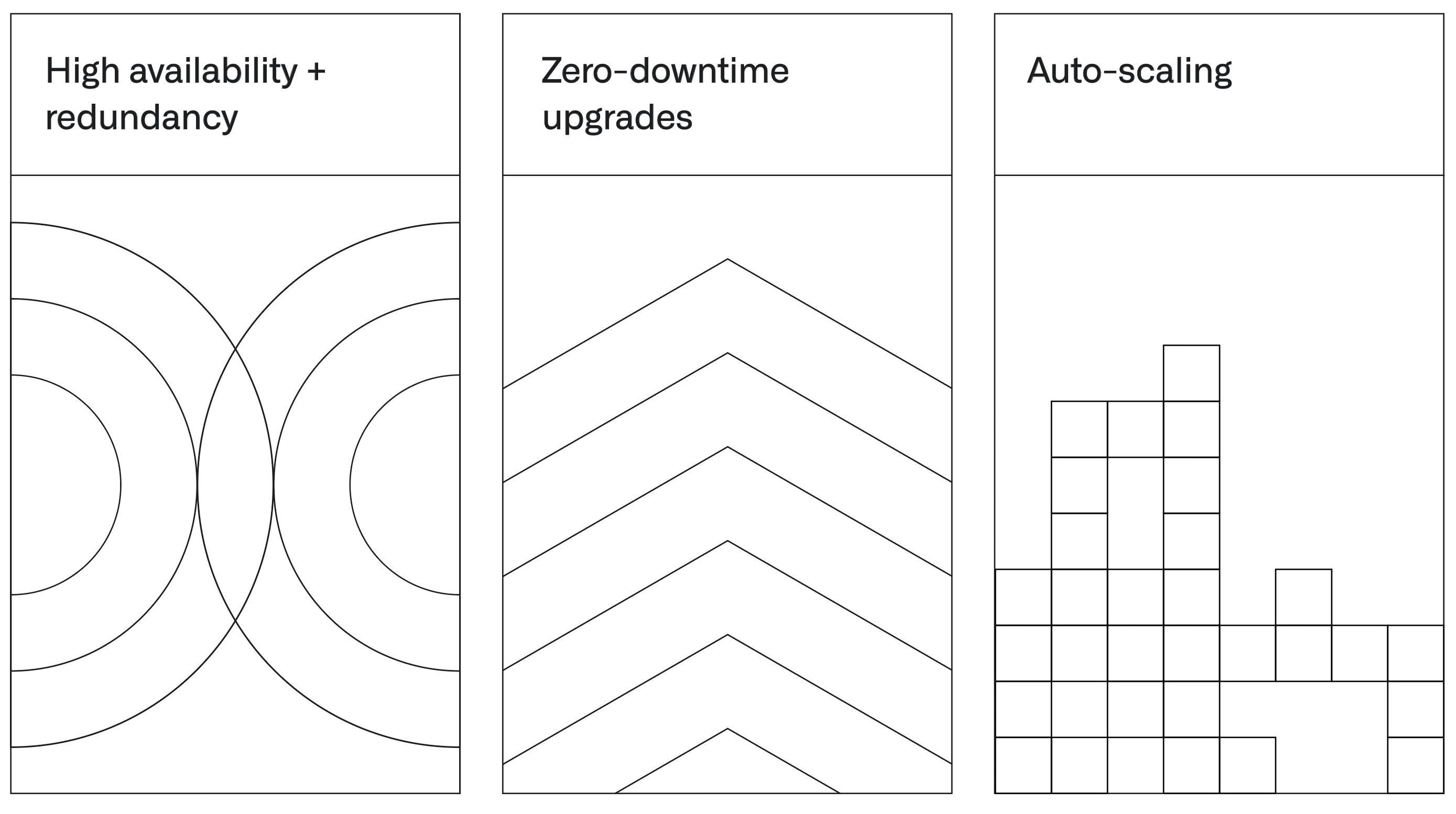

Each of the hundreds of services within the platform runs in a highly available, redundant configuration. Beyond core backend services, this includes front-end application services, analytics tools, application builders, and every constituent service used by each type of user.

All service upgrades are performed with zero downtime. Granular monitoring informs how each upgrade strategy is rolled out, observed, and, if necessary, rolled back. With Apollo as the backbone for all service orchestration fleet-wide, the level of secure automation far exceeds what is possible through manual or bespoke operations.
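The upgrade-and-rollback idea above can be illustrated with a minimal sketch. This is not Apollo's actual mechanism or API; `Replica`, `rolling_upgrade`, and the `is_healthy` callback are hypothetical names, and the health check stands in for whatever monitoring signal drives the decision.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Replica:
    """Hypothetical stand-in for one running instance of a service."""
    name: str
    version: str


def rolling_upgrade(
    replicas: List[Replica],
    new_version: str,
    is_healthy: Callable[[Replica], bool],
) -> bool:
    """Upgrade one replica at a time; restore all versions if a check fails."""
    previous = {r.name: r.version for r in replicas}
    for replica in replicas:
        replica.version = new_version
        if not is_healthy(replica):
            # Roll back every replica to the version it had before the upgrade.
            for r in replicas:
                r.version = previous[r.name]
            return False
    return True


fleet = [Replica("svc-a", "1.0"), Replica("svc-b", "1.0")]
succeeded = rolling_upgrade(fleet, "1.1", is_healthy=lambda r: True)
```

Upgrading replicas one at a time keeps the remaining replicas serving traffic, which is one common way to avoid downtime in a redundant configuration.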

Autoscaling across both the core services and the associated compute mesh leverages a consistent containerization paradigm. This is achieved through the Rubix engine, which underpins all of the platform’s autoscaling infrastructure and works in lockstep with the Apollo delivery platform.

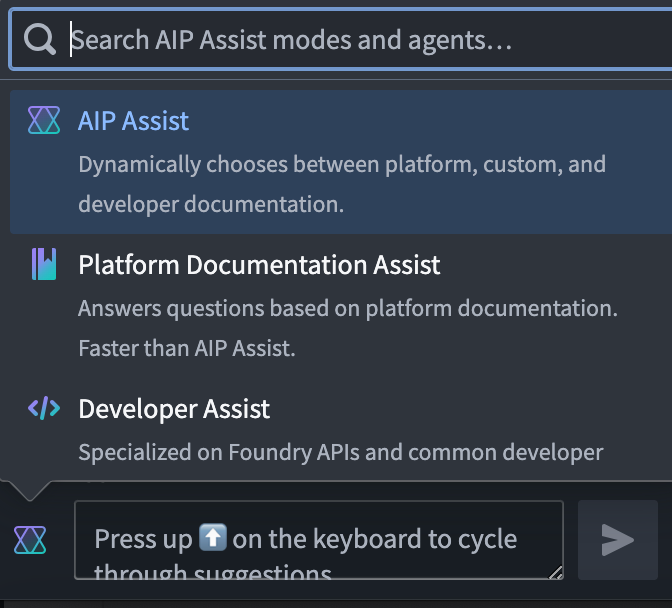

AIP Assist

AIP Assist is an LLM-powered support tool designed to help users navigate, understand, and generate value with the Palantir platform. Users can ask AIP Assist questions in natural language and receive real-time help with their queries.

User-friendly interface: Powered by LLMs, AIP Assist has an intuitive interface that makes it easy for users to ask questions and receive relevant, natural, and easy-to-understand responses.

Real-time assistance: AIP Assist provides real-time assistance to empower users to resolve issues and queries quickly, improving user productivity while reducing dependency on support teams.

Multi-language support: AIP can respond to queries in all common languages.

Context awareness: Designed to maintain context of the conversation, AIP Assist is aware of what Foundry application you are in.

Foundry-grade security: AIP Assist fully respects Palantir’s AI Ethics Principles and does not access your data.

Iterative improvements: Users can provide feedback on the quality of AIP Assist responses to help improve the tool as development continues.

Ecosystem

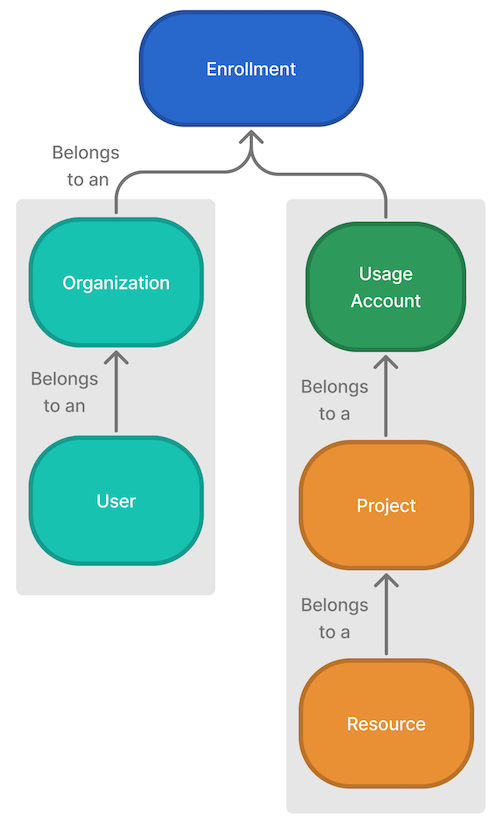

Enrollment: An enrollment is the primary identity of your Organization and establishes your company’s identity with Foundry services and the Foundry platform.

Organization: Organizations are access requirements applied to Projects that enforce strict silos between groups of users and resources. Every user is a member of one Organization, but can be a guest member of multiple Organizations. Learn more about Organizations.

Project: A Project is a collaborative space that brings together users, files, and folders for a particular purpose. Projects are the primary security boundary in Foundry and can be thought of as buckets of shared work. Learn more about Projects.

Resource: There are two potential uses of the term “resource” when working with Foundry. Service-level resources like CPU cores, virtual machines, and databases power the computation and data processing on the Foundry platform. Foundry resources sit above the service-level resources and include data concepts and structures such as Projects, workbooks, ontologies, and datasets. Most Foundry resources incur some form of service-level resource usage when users interact with them (e.g. by rebuilding a dataset).

Ontology and Ontology resources: The Foundry Ontology is an Organization’s digital twin: a rich semantic layer that sits on top of the digital assets integrated into Foundry. Ontology resources, namely object types and link types, are the building blocks, or “primitives”, of an Ontology. Ontology usage is attributed to the Project of each object’s input datasource. Learn more about the Ontology.

Usage account: A usage account combines and reports the usage incurred in a group of one or more Projects, typically for accounting purposes.

Project label: A project label supports grouping Projects for ad-hoc usage analysis.

User: A user is an individual who has been authenticated and has access to Foundry. As users interact with the platform, they incur usage that can be tracked within the Resource Management app.
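To make the relationship between Projects and usage accounts concrete, here is a small sketch of how per-Project usage might be combined into usage accounts. The Project names, account names, usage figures, and the `usage_by_account` function are all hypothetical and chosen for illustration; this is not the Resource Management app's data model or API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical mapping: Project name -> usage account name.
PROJECT_TO_ACCOUNT: Dict[str, str] = {
    "supply-chain": "operations",
    "maintenance": "operations",
    "forecasting": "finance",
}


def usage_by_account(
    records: Iterable[Tuple[str, float]],
    project_to_account: Dict[str, str],
) -> Dict[str, float]:
    """Sum per-Project usage into the usage account each Project belongs to."""
    totals: Dict[str, float] = defaultdict(float)
    for project, usage in records:
        # Projects without an account are grouped under "unassigned".
        account = project_to_account.get(project, "unassigned")
        totals[account] += usage
    return dict(totals)


# Illustrative (made-up) usage records: (Project, usage units).
records = [("supply-chain", 12.5), ("maintenance", 7.5), ("forecasting", 3.0)]
totals = usage_by_account(records, PROJECT_TO_ACCOUNT)
print(totals)  # {'operations': 20.0, 'finance': 3.0}
```

A project label could be modeled the same way, with a second mapping from Project to label used for ad-hoc grouping.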

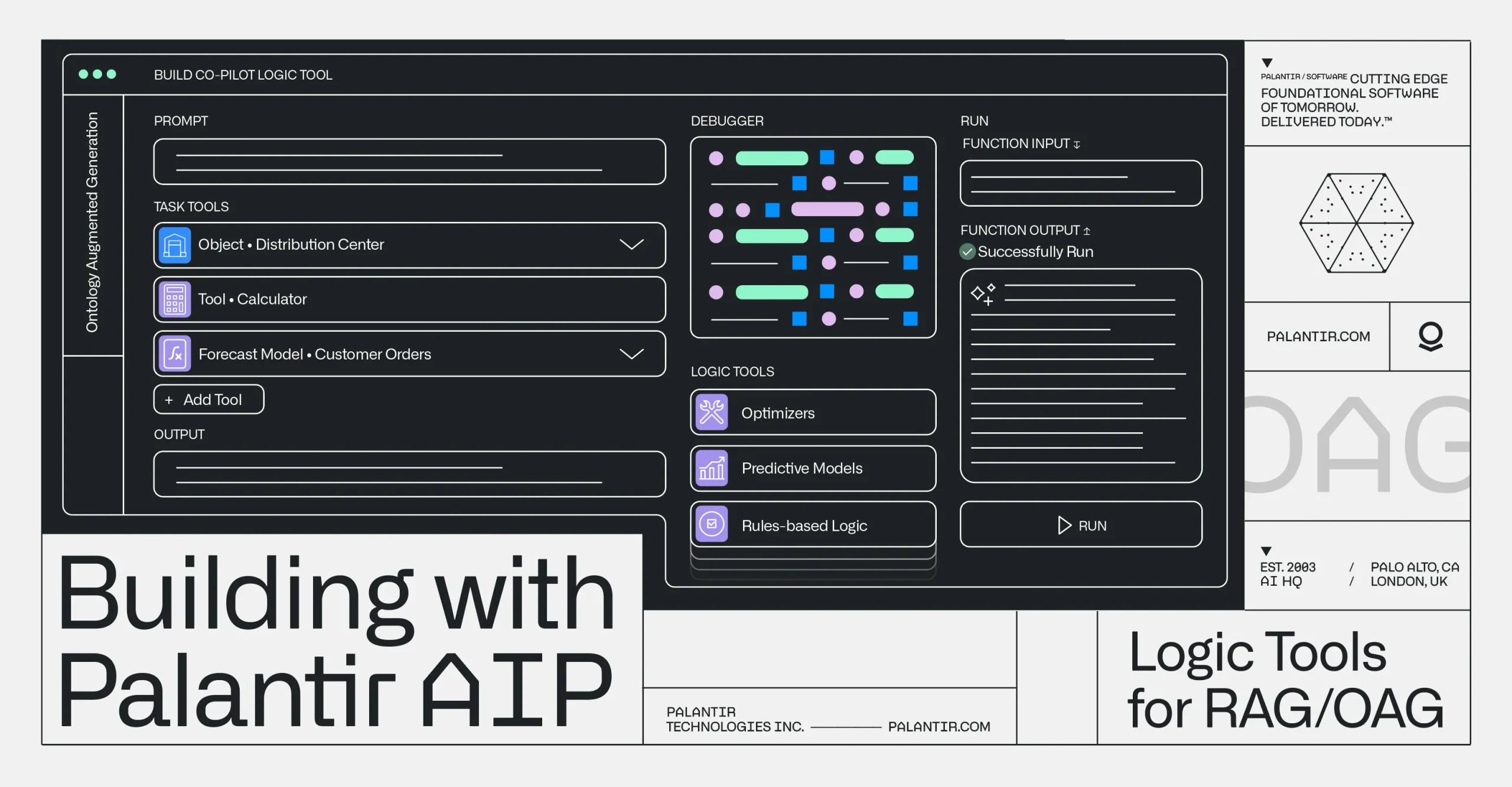

AIP Logic

AIP Logic is a no-code development environment for building, testing, and releasing functions powered by LLMs. AIP Logic enables you to build feature-rich AI-powered functions that leverage the Ontology without the complexity typically introduced by development environments and API calls. Using Logic’s intuitive interface, application builders can engineer prompts, test, evaluate and monitor, set up automation, and more.

You can use AIP Logic to automate and support your critical tasks, whether connecting key information from unstructured inputs to your Ontology, resolving scheduling conflicts, optimizing asset performance by finding the best allocation, reacting to disruptions in your supply chain, or more.

AIP Logic provides an intuitive interface to leverage the Ontology and LLMs via a Logic function that takes inputs (like Ontology objects or text strings) and can return an output (objects and/or strings) or make edits to the Ontology. For example, the LLM-powered function below takes input data from an Ontology object and cross-references that data with a customer email to recommend a solution for a given issue based on previous resolutions.
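Logic functions are built in the no-code interface, so the following is only a plain-Python analogue of the input/output shape described here: an object and a text string go in, and a string comes out. `SupportTicket`, `build_prompt`, `recommend_solution`, and the injected `llm` callable are hypothetical names, and the stub model returns a canned answer rather than calling any real service.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SupportTicket:
    """Hypothetical stand-in for an Ontology object."""
    ticket_id: str
    product: str
    previous_resolutions: List[str]


def build_prompt(ticket: SupportTicket, customer_email: str) -> str:
    """Combine the object's properties and the email text into one prompt."""
    history = "\n".join(f"- {r}" for r in ticket.previous_resolutions)
    return (
        f"Product: {ticket.product}\n"
        f"Previous resolutions:\n{history}\n"
        f"Customer email:\n{customer_email}\n"
        "Recommend a solution based on the previous resolutions."
    )


def recommend_solution(
    ticket: SupportTicket,
    customer_email: str,
    llm: Callable[[str], str],
) -> str:
    """Object and string in, string out; the model call is injected."""
    return llm(build_prompt(ticket, customer_email)).strip()


def stub_llm(prompt: str) -> str:
    """Placeholder model that ignores the prompt and returns a fixed answer."""
    return "  Replace the intake filter.  "


ticket = SupportTicket("T-1", "Pump X", ["Replaced intake filter", "Reset controller"])
answer = recommend_solution(ticket, "The pump keeps stalling.", stub_llm)
```

Injecting the model as a callable keeps the function testable without network access and makes the prompt construction easy to inspect on its own.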
